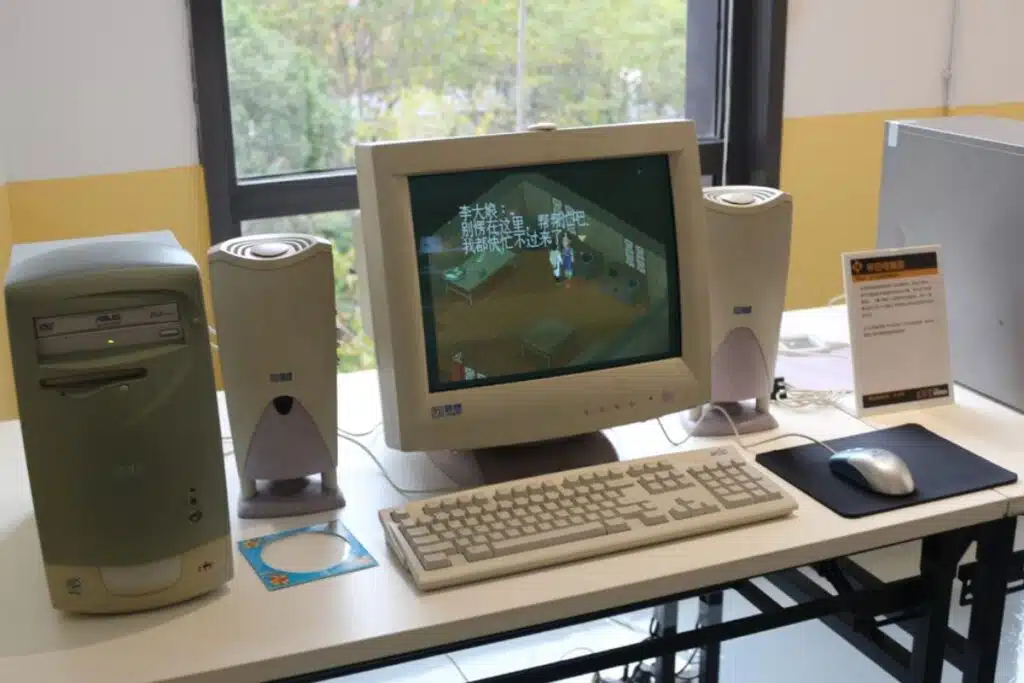

Contrary to the popular belief that AI demands ultra-powerful machines, researchers from EXO Labs and Oxford University have run a small, modern AI language model on a 1997 Intel Pentium II computer with a 350 MHz processor and only 128 MB of RAM. The experiment shows that language models, at least compact ones, can run on modest and even obsolete hardware.

The team sees the path to running larger models on such hardware in BitNet, a recent neural network architecture that uses ternary weights (-1, 0, 1) instead of traditional 16- or 32-bit floating-point numbers.
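A rough sketch of how ternary weights can be produced, assuming the "absmean" scheme described for BitNet b1.58: divide a weight tensor by its mean absolute value, round, and clip to [-1, 1]. The function names `ternarize` and `ternary_dot` are hypothetical, this is plain Python written for readability rather than any official implementation, and real BitNet models are trained with this constraint from the start rather than rounded after training.

```python
def ternarize(weights, eps=1e-8):
    """Map a non-empty list of float weights to {-1, 0, +1} plus one scale."""
    # Per-tensor scale: the mean absolute value of the weights ("absmean").
    scale = sum(abs(w) for w in weights) / len(weights) + eps
    # Round each scaled weight to the nearest integer, then clip to [-1, 1].
    q = [max(-1, min(1, round(w / scale))) for w in weights]
    return q, scale


def ternary_dot(q, scale, x):
    """Dot product with ternary weights using only additions and subtractions."""
    acc = 0.0
    for w, xi in zip(q, x):
        if w == 1:
            acc += xi      # +1 weight: add the input
        elif w == -1:
            acc -= xi      # -1 weight: subtract the input
        # 0 weight: the input is skipped entirely
    # A single multiplication restores the original scale.
    return acc * scale


q, s = ternarize([0.9, -0.05, -1.2, 0.4])
print(q, round(s, 4))
print(ternary_dot(q, s, [1.0, 2.0, 3.0, 4.0]))
```

Because zero weights are skipped and ±1 weights reduce to additions and subtractions, the inner loop needs no multiplications, which is one reason ternary models are attractive for weak CPUs.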

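Because each weight takes one of only three values, it needs about 1.58 bits of storage instead of 16 bits for a half-precision float. At that rate, a 7-billion-parameter model would shrink to roughly 1.38 GB. The demo itself, however, used a much smaller model: the widely reported speed of about 39 tokens per second on the 350 MHz processor came from a 260K-parameter Llama-architecture model, while a 15M-parameter model managed roughly one token per second.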
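The 1.38 GB figure follows from simple arithmetic. A ternary weight carries log2(3) ≈ 1.585 bits of information, conventionally quoted as 1.58 bits. The sketch below uses a hypothetical helper, `model_size_gb`, to compare weight storage for a 7B model at several precisions; it counts weights only and ignores activations, caches, and any layers kept at higher precision.

```python
import math


def model_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Information content of one ternary weight: log2(3) bits.
print(f"log2(3) = {math.log2(3):.3f} bits")

# Compare a 7-billion-parameter model at common precisions.
# 1.58 is the rounded per-weight figure used for BitNet b1.58.
for label, bits in [("FP16", 16), ("INT8", 8), ("ternary", 1.58)]:
    print(f"7B params, {label}: {model_size_gb(7e9, bits):.2f} GB")
```

FP16 storage comes out at 14 GB and INT8 at 7 GB, so the ternary version needs roughly a tenth of the half-precision footprint.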

If ternary architectures such as BitNet scale as hoped, models with up to 100 billion parameters might one day run on a single CPU at roughly human reading speed, challenging the assumption that AI requires expensive GPUs or cloud computing.

Beyond the technical achievement, this could broaden access to AI worldwide. Lower hardware demands could let schools, clinics, and small businesses, including those in developing countries, use AI tools without costly infrastructure. It could also support sustainability: repurposing old devices reduces electronic waste, and leaner models use less energy.

This experiment signals a possible shift in AI’s future, away from a sole focus on raw hardware power and toward algorithmic efficiency and software innovation, which could make AI more inclusive, affordable, and environmentally responsible.
