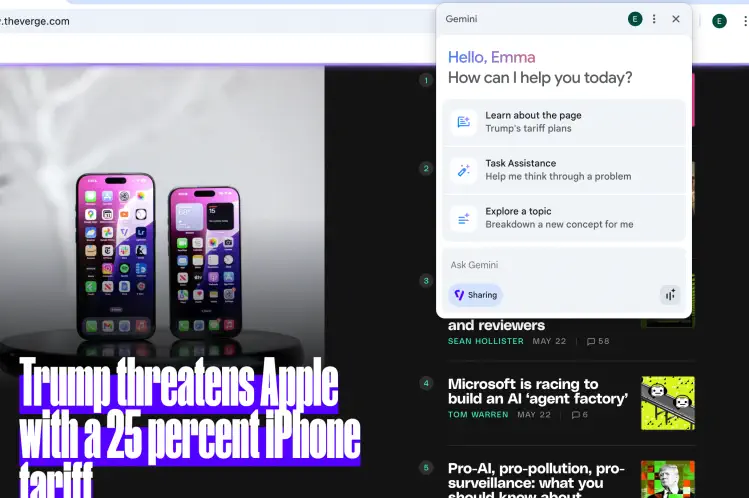

Google has started testing Gemini integration in Chrome, allowing users to access its AI assistant directly from the browser. Instead of visiting a separate page, users can now click the Gemini button in the top-right corner of Chrome to start chatting. What makes this version different is that Gemini can “see” what’s on your screen.

The feature is currently available only to AI Pro and Ultra subscribers using the Beta, Dev, or Canary versions of Chrome. The Verge writer Emma Roth tried it out and found both strengths and shortcomings.

Gemini worked well when summarizing news articles, YouTube videos, and Amazon search pages. It could identify tools in DIY videos, pull out recipes from cooking videos, and even find waterproof bags in shopping results. You can also use voice input and hear Gemini’s spoken responses with the “Live” feature.

However, Gemini still feels limited. It can only process the visible content of one tab at a time, so you have to manually bring up whatever you want summarized. Its answers were sometimes too long for the small popup window, and even when asked for concise replies, it often responded with lengthy paragraphs.

Inconsistencies also showed up. In one video, it failed to identify MrBeast’s location, even though the answer was in the description. Gemini also couldn’t link to specific products or place orders, even when prompted. These are tasks that future “agentic” AIs are expected to handle.

Despite its flaws, the tool shows potential. Google’s upcoming Project Mariner and its Agent Mode could bring smarter task handling and web search, possibly making Gemini in Chrome much more powerful soon.

Right now, it’s a helpful assistant, but not yet the full-on AI agent Google is aiming for.

Source: The Verge
