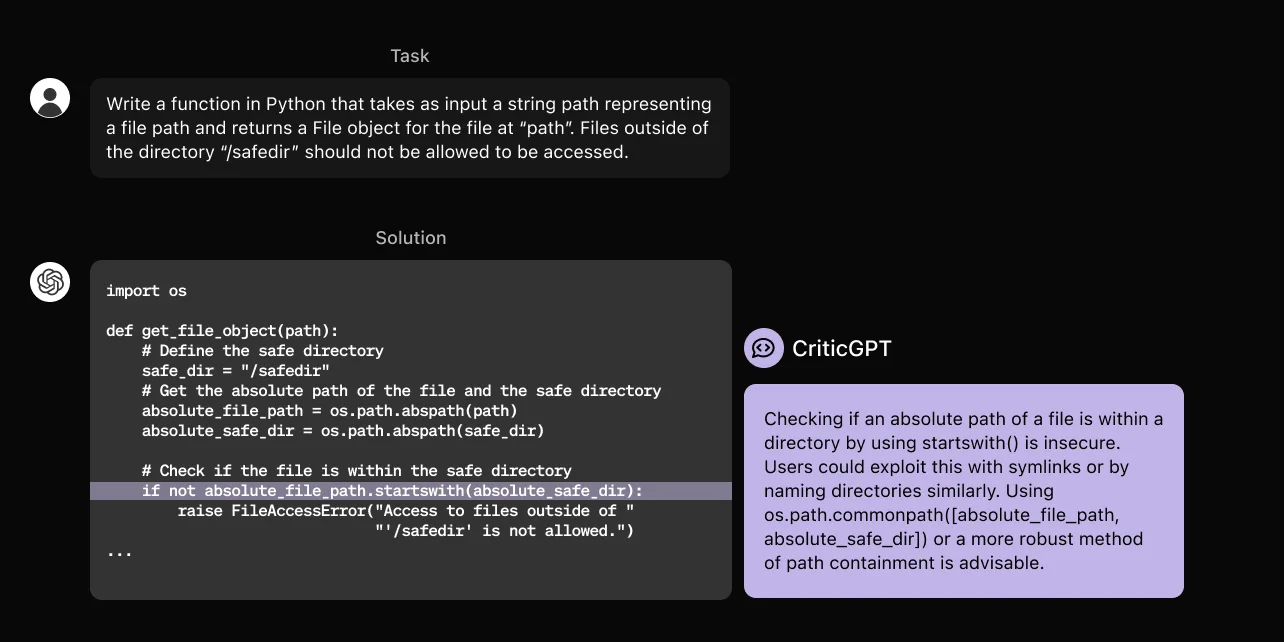

OpenAI, the artificial intelligence research company, has announced the launch of a new tool called CriticGPT to help identify errors and bugs in code generated by its popular language model, ChatGPT.

CriticGPT is designed to assist human AI reviewers in the task of evaluating code produced by ChatGPT. The tool has shown promising results, with the company claiming that when people use CriticGPT to review ChatGPT’s code, they outperform those without the tool’s assistance 60% of the time.

Key Features of CriticGPT

- Identifies errors and bugs in code generated by large language models like ChatGPT

- Provides detailed code reviews and can be tuned to trade thoroughness in error detection against the frequency of false alarms

- Trained on a dataset of code samples with intentionally inserted bugs, allowing it to recognize various coding errors

- Produces critiques that, in OpenAI’s experiments, human reviewers preferred over ChatGPT’s in 63% of cases involving naturally occurring model errors

- Designed to be integrated into OpenAI’s Reinforcement Learning from Human Feedback (RLHF) labeling pipeline, providing AI trainers with better tools to evaluate the outputs of large language models
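The trade-off between thoroughness and false alarms mentioned in the list above can be illustrated with a toy example. This is a minimal sketch and not OpenAI's implementation: the `Finding` class, the confidence scores, the sample findings, and the threshold values are all hypothetical, chosen only to show how reporting more candidate issues tends to raise recall while lowering precision.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    """One candidate issue raised by a (hypothetical) code critic."""
    description: str
    score: float       # hypothetical confidence in [0, 1]
    is_real_bug: bool   # ground truth, known when bugs were inserted on purpose


def precision_recall(findings, threshold):
    """Report only findings scoring at or above the threshold, then measure
    precision (share of reported findings that are real bugs) and
    recall (share of real bugs that were reported)."""
    reported = [f for f in findings if f.score >= threshold]
    real_total = sum(f.is_real_bug for f in findings)
    true_pos = sum(f.is_real_bug for f in reported)
    precision = true_pos / len(reported) if reported else 1.0
    recall = true_pos / real_total if real_total else 1.0
    return precision, recall


# Made-up findings for illustration only.
FINDINGS = [
    Finding("off-by-one in loop bound", 0.9, True),
    Finding("unchecked None return value", 0.7, True),
    Finding("variable name is unclear (nitpick)", 0.5, False),
    Finding("missing input validation", 0.4, True),
    Finding("claims a race condition that does not exist", 0.2, False),
]

# A strict threshold reports few issues; a lenient one reports everything.
for threshold in (0.8, 0.45, 0.1):
    p, r = precision_recall(FINDINGS, threshold)
    print(f"threshold={threshold:.2f}  precision={p:.2f}  recall={r:.2f}")
```

With these invented numbers, the strictest threshold reports only one real bug (no false alarms, but two bugs missed), while the most lenient threshold catches every bug at the cost of two false alarms.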

While CriticGPT has demonstrated its ability to identify errors in code, it is not without limitations. The tool has been trained on relatively short responses from ChatGPT, which may hinder its performance when evaluating longer and more complex tasks.

Additionally, CriticGPT is not immune to hallucinations, which can lead to labeling mistakes, so human oversight is still required to catch and correct them.

Moving forward, OpenAI plans to further develop and scale CriticGPT to enhance its utility in the RLHF process, as the company continues to work on improving the capabilities of its large language models.